As I've been writing about life extension, a cure for aging, etc., over the last few weeks I've been struck (again) by the fact that I personally am now too old to have much prospect of benefiting from any truly radical technological breakthroughs that would greatly extend human capacities or the maximum human life span.

I'm very grateful for the modern techniques of medicine and dentistry (as well as good nutrition and moderate exercise) that have kept me relatively youthful now that I find myself in middle age, and I expect to obtain some benefits from further technological developments when I reach later middle age and old age. But I'm under no illusion of being in the first generation of immortals. More likely, I and the baby boomers and GenXers who make up most of my friends are not even the last of our world's mortal generations.

My intellectual opponents seem consistently unable to grasp how radically things will change in the next 100, and certainly the next 1000 or 10,000, years. They massively underestimate the cumulative effects of scientific, technological and economic change. There is a failure of imagination here. I envisage that humankind will be radically transformed by technology, in different ways, over each of those time frames and beyond; this seems inevitable, unless there is some global catastrophe, and it is also (generally) desirable. The point is to try to influence how it starts to unfold. But at least some transhumanists and other futurists seem to be fooling themselves by massively overestimating what will be possible in their own lifetimes.

Surely, if we are realistic, we should not be too focused on narrowly selfish benefits from technological change. I see nothing wrong with getting whatever opportunistic benefits we can - I don't advocate any theory of impartial, self-denying morality. Yes, selfishness gives us one good motive to defend, say, embryonic stem cell research against irrational attacks. But the gains for me, personally, will be relatively marginal - perhaps a few extra years, perhaps a few extra good ones. The main thing is to have a positive effect on the probability that those who come after me will enjoy a smooth takeoff to whatever strange future awaits them.

That's really what I'm in this for. That's why I'm a philosopher and a futurist. That's what gives the satisfaction.

About Me

- Russell Blackford

- Australian philosopher, literary critic, legal scholar, and professional writer. Based in Newcastle, NSW. My latest books are THE TYRANNY OF OPINION: CONFORMITY AND THE FUTURE OF LIBERALISM (2019); AT THE DAWN OF A GREAT TRANSITION: THE QUESTION OF RADICAL ENHANCEMENT (2021); and HOW WE BECAME POST-LIBERAL: THE RISE AND FALL OF TOLERATION (2024).

Saturday, May 27, 2006

Friday, May 26, 2006

More thoughts on killing and life extension

I still owe an explanation for my rejection, in a previous discussion, of the strong claim that it is always wrong to shorten the life of a human person.

This claim might also be expressed by saying that human persons have an absolute right to life - meaning, I take it, that each of us is under a comprehensive moral obligation not to take the life of any human person. On this formulation, any such action would be the wrongful infringement of someone's right to continue living (and no such action would be, for example, a morally permissible infringement of a right, analogous to a necessary act of theft to save somebody from great and imminent danger).

Note, at this point, that I am confining my discussion to a relatively plausible class of cases - those involving human persons, i.e. beings who possess such properties as rationality, self-consciousness, and a sense of themselves as existing in time, with a past and a future. If the class of cases under discussion were defined by mere species membership, rather than personhood, any claim of absolute rights to life, or inflexible duties not to kill, would be implausible from the beginning.

However, even if we confine ourselves to human persons and develop the discussion in terms of rights, the claim that there is an absolute right to life cannot be sustained. There are too many easily imaginable situations, such as some of the notorious "Trolley cases" beloved of philosophers, where most of us would judge that killing a human person is the morally right action, perhaps to save others, or to achieve some other great utilitarian benefit.

At the same time, utilitarians and other consequentialists do not accept the existence of rights at all, except as conventions or rules of thumb; nor do they normally accept inflexible rules. For them, everything depends on what course of conduct will have the best consequences, seen by utilitarians as the maximisation of happiness or preference satisfaction. Even rule-utilitarians will adopt only those rules that have a prospect of producing the best consequences within a specified social, economic, etc., context.

To the extent that I put particular weight on a moral rule against killing, it is in response to the widespread human fear of death, particularly sudden, violent death at the hands of other human beings. This fear is deep in our nature. When we sense this fear in other people, it tugs at our sympathies, while the reality of others' capacity for violence in the service of social or economic gain necessitates laws against the kinds of acts that we categorise as murder. It is unsurprising that murder is forbidden - or at least drastically restrained and regulated - in all cultures.

But these sorts of utilitarian, Humean, or Hobbesian reasoning get us a rule that is less than absolute, and which is always subject to specification and qualification according to the relevant historical circumstances. Indeed, all of our recognised moral rules are useful only in so far as they meet important human interests and needs in the sorts of circumstances that shaped the rules in the first place. Until now, those circumstances have universally included such facts as that we are mortal, that most people who live into their 80s become very frail, however robust they formerly were, that it is almost unheard of for people to live to 120, and so on.

If we are now considering technological changes that could greatly alter the human condition, and with it the context within which moral rules evolve and moral intuitions are shaped, it is not at all obvious that the existing moral rules can be retained in their entirety. On the contrary, it is easy to imagine science fictional societies in which killing people when they reach the age of 300, or even at the age of 100, is justifiable on utilitarian grounds - though whether such scenarios actually seem at all plausible might depend on fiercely contested perceptions of human nature, such as conflicting beliefs about the inevitability of ennui and despair if one lives long enough.

Even before we find ourselves in such a futuristic setting, all bets may be off. If we are now in a world where anti-aging technologies are a real prospect, that is already a different world from the one in which our intuitions about the wrongness of killing were formed. If historical assumptions about human decline and mortality can no longer be made, our moral norms may need to change. We cannot take a radical view of what changes are possible while taking a conservative view that our current norms remain appropriate to the altered situation. This makes moral and policy debates about emerging technologies very tricky.

Assume for the sake of argument that failing to provide the general population with an immortality drug, if one became available, could be considered equivalent in some sense to killing the individuals who are so deprived. Even if we get to that point, it is not clear that this sort of killing would be morally wrong. It depends on what would be the actual effect of having a population of people who use an immortality drug. If the outcome would be some kind of disastrous conflict for resources, or some kind of widespread, catastrophic unhappiness, withholding the drug might be justified.

Thus, bioconservatives such as Francis Fukuyama, who evidently expect dreadful outcomes, are being rational, in their fashion, in claiming that society and the state are morally entitled to tell us how long we may live. Of course, it is a shocking kind of rationality. I immediately want to add that the last thing I want to do is join Fukuyama in handing such a power to the state - any state, no matter how democratically accountable. To be clear, I am suggesting that there is no absolute right to an immortality drug, even if we had one, or to some more plausible "cure for aging". But at the same time, I want to stress what kind of argument has to be run if there is to be an intellectually credible case against technologies that would extend human life.

It appears to me that the burden lies heavily on the opponents of life extension to develop such a case, because nothing I've said above (or anywhere else) denies that longer, healthier lives, and particularly the radical extension of our times of physical robustness and mental clarity, are all very attractive. In fact, that is just the point. We should be supporting technological advances that will give us these things - basing our case squarely on the fact that they are things which really are attractive to beings like us, that it is rational for us to want them, and conversely that it is rational to struggle against the process of ontological diminution (David Gems's useful term) that comes with aging. Indeed, supporting research that could deliver these benefits would be a rational and appropriate use of public funds.

Opponents of life extension have little prospect of success unless they can make out a case that the probable result is something horrible. I am not afraid to concede that such a case could be made in principle, but let's see if it can be sustained in practice. Transhumanists and life extension advocates won't have everything their own way in a debate over this issue, but that's because it is far easier (and far easier to be taken seriously) to present as a pessimist about the future, referring to valued things that could change or be superseded, than to be an optimist, attempting to picture a better future in convincing detail.

In the end, however, I expect that the bioconservatives' case will not be made out intellectually and will have only short-term success in gaining converts. Perhaps ironically, their problem is that their aims run against the very thing they hold sacred - human nature - for it is in our nature to change things, including ourselves if we can, to bring them closer to the heart's desire.

Wednesday, May 24, 2006

Anti-aging, again

In an earlier blog entry, I presented my reconstruction of an argument, developed by Aubrey de Grey, that attempts to demonstrate the existence of a moral obligation to fund radical anti-aging research, i.e. research aimed at "curing" the human aging process. In this entry, I'm going to identify a problem for the argument, and I'll then comment on the implications. I also think that there's an additional problem, but I'll merely mention it, and leave it for a later time.

Curing?

First, I have placed the word "curing" in inverted commas to signal that I am well aware of the argument that the word "cure" and its cognates can legitimately be used only of a disease or disorder that interferes with an organism's species-normal functioning. According to this argument, aging is "normal", and so cannot be "cured". Having adverted to this, I must add that I think it's unhelpful to the debate. I find the distinction between what is normal and otherwise, or between what is therapeutic and what is "enhancing", rather problematic. I don't deny that such distinctions can be made coherently in some contexts, but even in those contexts their moral significance is unclear.

Anyway, de Grey's meaning is clear enough: he wants to find a way to stop, or even reverse, the aging process. His argument - the argument I've been considering - does not depend on the strong premise that aging is closely analogous to a disease, and when he uses the word "cure" it can be interpreted without that connotation. Admittedly, it connotes the idea that aging is something undesirable, something whose conquest we would rightly celebrate. Again, however, nothing in the argument actually assumes this.

Formalising the argument

Here, again, is my attempted formalisation of the argument:

Sub-argument one

P1.1. If we provide substantial funding for radical anti-aging research, then biomedical science will develop technologies capable of stopping, and possibly reversing, the process of aging.

P1.2. If biomedical science develops technologies capable of stopping, and possibly reversing, the process of aging, those technologies will be widely used.

P1.3. If biomedical technologies capable of stopping, and possibly reversing, the process of aging are widely used, then at least some human persons will have their lives extended beyond what would otherwise have been their duration.

C1. If we provide substantial funding for radical anti-aging research, then some human persons will have their lives extended beyond what would otherwise have been their duration.

Sub-argument two

P2.1. (C1.) If we provide substantial funding for radical anti-aging research, then some human persons will have their lives extended beyond what would otherwise have been their duration.

P2.2. If we do not provide substantial funding for radical anti-aging research, then some of those human persons will not have their lives extended beyond what would otherwise have been their duration.

C2. If we do not provide substantial funding for radical anti-aging research, then we will fail to extend the lives of some human persons beyond what would otherwise have been their duration.

Sub-argument three

P3.1. There is no morally salient distinction between shortening the life of a human person against that person's will and failing to save the life of such a person.

P3.2. There is no morally salient distinction between saving the life of a human person and extending the life of such a person beyond what would otherwise have been its duration.

C3. There is no morally salient distinction between shortening the life of a human person and failing to extend the life of such a person beyond what would otherwise have been its duration.

Sub-argument four

P4.1. It is morally impermissible to shorten the life of a human person against that person's will.

P4.2. (C3.) There is no morally salient distinction between shortening the life of a human person and failing to extend the life of such a person beyond what would otherwise have been its duration.

C4. It is morally impermissible to fail to extend the life of a human person beyond what would otherwise have been its duration.

Main argument

P1. (C2.) If we do not provide substantial funding for radical anti-aging research, then we will fail to extend the lives of some human persons beyond what would otherwise have been their duration.

P2. (C4.) It is morally impermissible to fail to extend the life of a human person beyond what would otherwise have been its duration.

Conclusion. If we do not provide substantial funding for radical anti-aging research, we act in a way that is morally impermissible.
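Sub-argument one is the part of this structure whose form is least in doubt: it is a chain of conditionals (a hypothetical syllogism). As a minimal sketch, treating each premise as a material conditional of classical propositional logic (a simplification the argument itself does not make), a brute-force truth table confirms that C1 follows from P1.1 to P1.3, and, by contrast, that running the chain backwards does not. The letters F, T, W and E and the helpers `implies` and `is_valid` are hypothetical labels introduced here for illustration only. Note that such a check tests form alone; it says nothing about whether the moral premises of sub-arguments three and four are true.

```python
from itertools import product
from typing import Callable, Sequence

Formula = Callable[..., bool]


def implies(p: bool, q: bool) -> bool:
    """Material conditional: false only when p is true and q is false."""
    return (not p) or q


def is_valid(premises: Sequence[Formula], conclusion: Formula, n_vars: int) -> bool:
    """Brute-force truth table: an argument form is valid iff no assignment
    of truth values makes every premise true and the conclusion false."""
    for row in product([True, False], repeat=n_vars):
        if all(p(*row) for p in premises) and not conclusion(*row):
            return False  # counterexample row found
    return True


# Hypothetical propositional letters for sub-argument one (an assumption):
# F = we provide substantial funding for radical anti-aging research
# T = biomedical science develops technologies that stop (or reverse) aging
# W = those technologies are widely used
# E = some human persons have their lives extended
sub_one_premises = [
    lambda F, T, W, E: implies(F, T),  # P1.1
    lambda F, T, W, E: implies(T, W),  # P1.2
    lambda F, T, W, E: implies(W, E),  # P1.3
]
sub_one_conclusion = lambda F, T, W, E: implies(F, E)  # C1

print(is_valid(sub_one_premises, sub_one_conclusion, 4))  # True

# Contrast: from the same premises plus E, inferring F (affirming the
# consequent) is invalid; F=T=W=False, E=True is a counterexample.
print(is_valid(sub_one_premises + [lambda F, T, W, E: E],
               lambda F, T, W, E: F, 4))  # False
```

The exhaustive table is used because, with only four letters, there are just sixteen rows to inspect, so nothing subtler is needed to settle the formal question.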

Analysis

As I've stated previously, it's not immediately obvious where, if anywhere, this goes wrong. If the argument is not strictly valid, that looks easily fixed. I also think that all the premises relied on in the first two sub-arguments are plausible enough to justify acceptance. If the argument fails, it has to be because at least one of the following is unacceptable: P3.1, P3.2, or P4.1.

Those premises are critical because they support a controversial premise ultimately relied on in the main argument:

P2. (C4.) It is morally impermissible to fail to extend the life of a human person beyond what would otherwise have been its duration.

It seems clear that this premise needs support. It is a very strong statement to make, given that it is meant to include extensions of lives beyond their so-called "natural" durations. Someone who simply asserted this claim, without any supporting argument, and then relied on it to support anti-aging research, would fail to persuade most rational opponents. The difficulty here is that it is the kind of premise that would be denied, quite comfortably, by most opponents of anti-aging research. It certainly does not seem to be a recognisable principle adopted in commonsense morality; this is understandable, since it does not relate to the kind of everyday situation that commonsense morality deals with.

Of the premises in its support, P3.1. and P3.2. look controversial:

P3.1. There is no morally salient distinction between shortening the life of a human person against that person's will and failing to save the life of such a person.

AND

P3.2. There is no morally salient distinction between saving the life of a human person and extending the life of such a person beyond what would otherwise have been its duration.

By contrast, P4.1 looks straightforward:

P4.1. It is morally impermissible to shorten the life of a human person against that person's will.

However, things are seldom what they seem.

Premises 3.1 and 3.2 are the sorts of claims that utilitarians and other consequentialist moral philosophers are likely to accept. Such theorists are likely to take the following view: if the consequences are the same, e.g. Xavier dies an early death, Yasmine lives to a ripe old age (or beyond), or Zeke suffers a year of horrible pain, the rightness or wrongness of the conduct is also the same, irrespective of whether the conduct is classifiable as, for example, an act or an omission.

However, I doubt that we should accept this analysis, despite its popularity in the academy, and I think that there is a point to commonsense morality's distinction between acts and omissions.

The actual codes of morality that govern societies (what is sometimes called their positive morality) do not function to require the individuals concerned to maximise the happiness, or preference satisfaction, of all, whether quantified across all citizens of the society, all beings with interests of any kind, or any other set of plausibly relevant beings. Positive moralities seem to have much less ambitious functions, at the core of which is the imposition of constraints on the degree to which we are at liberty to conduct ourselves as positive dangers to each other's lives and bodily integrity. While morality may provide for the kind of solidarity, in a dangerous world, that connects with a duty of rescue, even the failure to carry out very easy rescues is seldom considered to be equal in seriousness with active attacks. A compelling explanation for this is that the restraint of actual violence (or analogous kinds of attacks) against other human beings is essential for social life. By contrast, it would at least be possible to have a morality that imposes no general right to be rescued - i.e., one that expects people to rely on their own resources when danger comes not from the violent attack of a fellow citizen in pursuit of social or economic gain, but from some other source.

Seen from this perspective, P3.1 and P3.2 are quite problematic. It may still be a moral requirement that we rescue people from danger, or even extend their lives, but the requirement may be hedged around considerably. Failing to rescue is not treated in the same way as stabbing, electrocuting, or poisoning, and there may be many questions about how much effort or resource expenditure, or other cost, is involved in saving someone whose need for rescue has arisen independently of your own actions. Thus, rescues and life extensions may have some moral importance, but they are usually not seen from the viewpoint of positive morality as obligations of the very highest order. With positive attacks, by contrast, no degree of possible gain to the individual concerned is considered to be a good justification; the idea is to remove acts of violence from the repertoire of competitive actions that are socially tolerated.

Furthermore, I am not simply assuming, in the manner of vulgar moral relativists, that we must all defer to the positive moral systems of our respective societies. On the contrary, my point is that the relevant distinctions made by actual moral systems may well be justifiable, if morality is looked on as something more like a social contract for mutual gain, and less as something like a total scheme for maximising general utility on an impartial basis.

Because of my fundamental understanding of the nature and function of morality, which I've only sketched here (and without claiming that something like Hobbesian social contract theory gives a complete account of morality), I am inclined to reject P3.1. I also think that P3.2 may be difficult to defend - though de Grey makes a forceful attempt - but it is not necessary to critique it separately.

If we reject P3.1, the argument fails to establish that merely not funding anti-aging research is morally comparable to shortening people's lives. That is enough to show that the argument fails. If even one premise on which the conclusion relies is unacceptable, then we do not have a logically sound argument on our hands. The argument fails to demonstrate that there is any obligation here comparable to the negative obligation not to commit acts of murder. In one sense, of course, that is an unsurprising result. I expect that most people would find such a claim quite counterintuitive. Interestingly, though, this result was reached by applying a methodology that I am independently committed to. It shows the explanatory power of that approach.

Meanwhile, what about P4.1? I don't think it is acceptable. Indeed, utilitarians in particular should reject it. While there may normally be very strong moral obligations to avoid shortening human lives, this observation needs to be qualified in many ways, and the qualifications that are relevant to debates about curing aging, and questing for the grail of biological immortality, may be very significant. That, however, is a subject too large to pursue before I head to bed tonight. It will have to await my next blog entry on the subject.

Some final comments

I do not intend to deny that contributing to anti-aging research is morally praiseworthy, or even that it may turn out to be morally obligatory in current circumstances when all facts are known and all things finally considered. It's just that the nature of any moral obligation has not been demonstrated to be of the same high (if less than absolute) order as the obligation to avoid murdering other human beings. Thus, the force of any such obligation might need to be weighed against other claims on resources, possible unwanted side effects, and so on. Anti-aging research may still be worth paying for through the tax system, but a quite different sort of argument will have to be made as to why this is good policy in the whole range of circumstances that now confront us.

Apart from the sheer intellectual stimulus involved, it gives me no great pleasure to criticise particular arguments in support of funding for anti-aging research, because I am actually in favour of such funding. However, it looks to me right now as if arguments that attempt to establish an overwhelming moral obligation, similar to the obligation not to murder, are doomed to failure. The problems I've identified above will probably affect all arguments of that kind.

If I'm right about that, advocates of anti-aging research may have a more arduous and less palatable task than is immediately apparent. It will involve facing questions about whether human desires to resist aging, and preserve youthful health and robustness, are rational, and whether they are worthy of being satisfied for their own sake. My claim is that these desires can, indeed, survive scrutiny, and that it takes an unattractive, if not uncommon, kind of puritanism to want to deny them. Bioconservative moralists of the left and right, who typically dismiss such desires as narcissistic or hubristic, are on shaky ground. However, making out this case will require a different style of argument.

I'll return to that issue another time - probably often.

Tuesday, May 23, 2006

Uvvy Island

I'm looking forward to the launch of Uvvy Island on 7 June - this being the island that Giulio Prisco has created, within the popular Second Life virtual reality world, as a haven for transhumanists and technology enthusiasts to work creatively together. At least, I gather that's the idea. Giulio has obviously put enormous effort into this.

James Hughes will be speaking on "Transhumanism: The Most Dangerous Idea?" Giulio Prisco and Philippe Van Nedervelde will also be speaking: "Transhumanist Technologies on the Horizon and Beyond"; and "Virtually Real Virtuality". Naturally, I'll blog my report on how it all goes.

I do like the thought of a bunch of future-oriented thinkers being able to meet in a small, sunlit corner of virtual reality - however primitive VR may be at the moment - to take stock, share ideas, and make plans. There's plenty of potential as the technology gets more sophisticated, and the use of virtual reality forums could well have practical advantages for someone working in Australia who doesn't get a lot of opportunities to meet these people face to face. Besides, it all has a healthy appeal to the big kid in me. We don't have to be sombre all the time.

The simple architecture of the island is cool to fly around and check out. In a couple of the uploaded pics, you can see "me" exploring the auditorium of the central building, where the launch will take place. I've also scoped out the rest of the island, including the cosy meeting hall for IEET.

I'll just be listening this time, I guess, but I'll be there in my Second Life guise as "Metamagician Apogee" (I chose the surname from the limited list that Second Life offered me when I registered, but it seems appropriate). Well, what the heck - there's also supposed to be a party after the talks.

Hmmm, this is going to be fun.

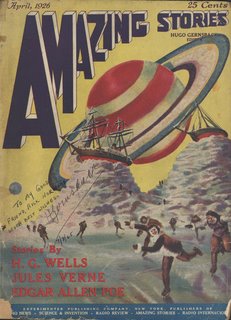

Another birthday - of a kind (science fiction turns 80)

Any claim about the origin or definition of science fiction is inevitably controversial - questions about the boundaries of the genre, and the first appearance of something that was distinctively sf, are endlessly debated by writers, critics and fans. Did science fiction begin early in the 19th century, with the publication of Mary Shelley's Frankenstein? Or perhaps in the 1890s, with the appearance of H.G. Wells's first great scientific romances? But what about the earlier work of Edgar Allan Poe and Jules Verne? Perhaps the genre can be traced back to earlier times, when precursor works appeared - but how much like the real thing does a precursor have to be if it is to count?

My favourite date is 1895, but a good argument can also be made for 1926, in which case science fiction has just turned 80. On this account, the work of Verne, Wells, etc., was retrospectively included in a genre that really crystallised as a social phenomenon with the publication of the first specialised magazines, notably Hugo Gernsback's Amazing Stories. That theory may be as good as any. It is certainly worth noting that the modern sf genre has a social history that is continuous in many ways with the professional work that first appeared under the editorship of Gernsback and later John W. Campbell. What earlier fiction was seen as belonging to the genre was largely defined by those great early editors.

Of course, I actually take the view that there's no simple fact of the matter of when science fiction started - we have various facts about when works of certain kinds began to appear, what social and professional connections existed, and so on. There is no further fact as to which of these events was "really" the beginning - that's more a question of semantics and personal emphases, and of what historical perspectives are illuminating in different contexts. But there's no doubt that 1926 was an important year in the science fiction genre's history, so let's take note and pour a glass in celebration.

Monday, May 22, 2006

Second Life - this could get addictive!

Some of my colleagues at IEET, particularly Giulio Prisco, have used the Second Life virtual world to set up a virtual-reality meeting space, so I've registered there and am trying to figure out how it all works.

Learning how to control an avatar, making it walk, fly, or teleport around this alternative reality, is a challenge at first. It's also a challenge just designing an avatar. I've been trying, with my limited artistic talent, to create a somewhat idealised (of course) but faintly recognisable image of myself. I think enough is enough, and maybe I should stick with the current version.

Giulio took me on a virtual tour of the dives of Amsterdam the other day, but I haven't yet figured out how to soar and roam by myself beyond the safe space of the island where IEET has its meeting hall. I can already see, however, how this could get addictive.

Sunday, May 21, 2006

Monsters of Eurovision

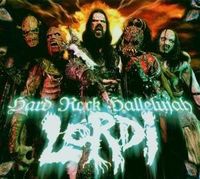

I have a secret (well not any more) addiction to the annual Eurovision Song Contest. I love the glamour, the kitsch costumes, the silly pop songs, the leggy young women with big hair and screechy voices. Strangely enough, the act I'm rooting for usually wins, and this year was no exception with the Finnish "monster rock" band, Lordi, taking the prize for "Hard Rock Hallelujah".

Saturday, May 20, 2006

Friday, May 19, 2006

Why not push beyond the boundaries?

Imagine that there are certain natural boundaries to human capacities, beyond which any increase is "enhancement", rather than "therapy". Does that mean we have a moral obligation to stay within those boundaries? I don't see why. Such reasoning seems like a clear case of the fallacy of claiming that what "is" also "ought" to be.

For the sake of argument, leave to one side the problem that defining a therapy/enhancement boundary may often be an impractical task, and that it may defy coherent specification in some contexts.

Waiving that point, it appears that many human beings do, indeed, wish to go beyond the natural boundaries, or what look like them. If it were possible, many of us would like to obtain unprecedented levels of health, fitness, cognitive abilities, or whatever. So, why not? The fallacy, famously denounced by David Hume, of deriving an "ought" from an "is" is not a fallacy when part of the "is" relates to a desire or a fear. Accordingly, we may have reason to push beyond the boundaries. It is quite rational and reasonable to construct practical syllogisms such as the following:

P1. Xerxes desires to live to be 300.

P2. If Xerxes uses certain technological means, he can fulfil the desire specified in P1.

C. Xerxes ought to use the technological means specified in P2.

OR

P1. Zenobia fears a loss of mental acuity as she ages.

P2. If Zenobia uses certain technological means, she can avoid the feared thing specified in P1.

C. Zenobia ought to use the technological means specified in P2.

These are perfectly valid items of practical reasoning. Of course, the imperatives they establish are merely hypothetical ones - they give Xerxes and Zenobia reasons to act in the way prescribed if they actually have the desires or fears concerned. However, it is apparent that the horizons of many people's desires and fears do extend beyond what look like natural boundaries between the realms of therapy and enhancement. Why, then, should a relevant moral boundary be located there, assuming we can work out where "there" actually is? At first blush, the relevant boundary is surely that between the domain of what is desired, or feared, and what is beyond desire and fear. For many people, for example, immortality may not be directly desired (I'm not sure I desire it, because I find it hard to comprehend living forever), so there may be no reason for them to pursue it for its own sake, but extended health and robustness (physical and cognitive) are desired by almost anyone, so there is a good reason to pursue those things. (Of course, things that we don't directly desire may follow from pursuing things that we do desire, but that does not seem to be a problem - if the pursuit of better health leads to a much longer life that I did not directly desire, then no harm seems to be done.)

If we have good reasons to use technological means to fulfil certain desires, such as extended health and robustness, how could there be a moral imperative to forgo the technological means? This is not meant as a rhetorical question; let's consider the possibilities.

For a start, it might be pointed out that we do not have reason to pursue just any old desire that we happen to have. After all, our desires might conflict with each other, or there may be reasons why we would want to disown some of them on giving them rational scrutiny. Likewise with our fears; perhaps on rational scrutiny there may be things that we fear that are not really against our interests and should actually be welcomed. Hence, I don't deny that some account is needed that distinguishes between the full range of desires and fears that we actually have and those that can be said to survive rational scrutiny, to be connected with interests, and so on. At the same time, I don't see how we can ever entirely step out of the totality of desires and fears that make up the starting point when we scrutinise our motivations. If I am going to judge some desire that I currently possess as irrational, it will eventually be on the basis that it inhibits me from pursuing things that are ultimately more important to me, or from taking steps to avoid things that I fear deeply.

To sum up at this point, I'd rather talk about things that we rationally fear and desire (or value, if this conveys the idea of desires that have been reflected upon) than simply about fears and desires, which may tend to suggest quite superficial levels of our motivation. However, as far as I can see, such an appeal to reason will not lead us to abandon fears of losing capacities, even at a "natural" time in our lives, or to abandon desires to increase them.

More generally, there may be many reasons to act in ways that are not narrowly selfish - e.g. by cultivating such virtues of character as kindness and loyalty, by eschewing attempts to get our way in social competition by violence and fraud, etc. Such reasons may be those of long-term self-interest, those of sympathy for other beings, or those of rational reciprocity (giving up the liberty to act in certain ways if most other people do likewise and we are all better off as a result). Off-hand, it is not obvious that the categories of good sources for morality are closed. But nor will just anything count as a good source of morality. It will always be something to do with our rationally-scrutinised fears, desires, values, interests, and so on.

Bioconservatives can sometimes put a rational case to be cautious, especially in the short term, about a particular technology that poses individual or collective dangers. But bioconservatives have provided only the most flimsy attempts at any general justification for imprisoning our capacities within the boundaries of what is "given" by nature (even though it is in our nature to push beyond). Prima facie at least, if we desire transformations of our capacities then we have reason to pursue them by whatever means promise to be effective. If this leads to new desires at a later time, we or our successors will, in turn, have reasons to pursue those (for example, immortality may be something directly desired by a being with superior capacity to comprehend it).

There is nothing obviously wrong with this iterative and directional process of capacity transformation, and I cannot imagine how it can, or why it should, be halted indefinitely as bioconservatives often seem to want. Resistance to this process, when not aimed at specifiable, short-term dangers, is fundamentally irrational, and we have good reason to stand up and say so.

Genetic chimeras - and why give a damn about morality?

I just made some comments like the following in another forum, where my pal Damien Broderick was discussing the morality of scientific experiments that involve inserting human genes into non-human animals. Damien, quite rightly, fulminated against the bizarre essentialism implicit in some of the attacks on these experiments.

This provoked me to some thoughts about what morality is actually for. Why give a damn about it, as most of us obviously do?

I see morality as a social institution that we use to protect things that we rationally value, and protect us and other beings that we care about from things that we rationally fear. The word "rationally" and its cognates require a lot of glossing, of course, and that is part of my project when I'm wearing my hat as a philosopher. In practice, popular morality often protects us from things that don't seem all that scary, if we look at it in a hard-headed, yet soft-hearted, way. Some moral pronouncements that emanate from bioconservatives are much worse than popular morality; they appear to have lost contact totally with what morality is actually for. Morality does some good; there are contexts in which we need more of it. But it also has effects that it is rational for beings like us to assess as harmful.

I want to be clear that our fears and values don't have to be egoistic ones. I'd be fearful for poor little Ratty the super-rat if she was going to be despised and rejected like Frankenstein's monster, miserable about the love she never obtains from her kind, heartbreakingly frustrated in her efforts to grow up and attend Oxbridge, then become an airline pilot, etc. If all that is going to happen, let's not bring such an unhappy creature into the world. It would be an outcome to shock our sympathies, even if it never disrupted our society or harmed us directly.

But of course such a thing is not going to happen. It's not the sort of result you'd get inadvertently, or that scientists are building up to deliberately. There are a few people, such as another friend, James Hughes, who seriously champion the creation of "uplifted" animals, but the idea is that these animals would be well cared for, and even deferred to. I'm not sure that would be the practical outcome, but the issue here is essentially a simple consequentialist one. In any event, the production of superintelligent rats, cats, and elephants is not on the agenda of current genetic research.

As Damien says, the worries about genetic human/animal chimeras seem to imagine scenarios from Z-grade science fiction movies.

When looking at what moralists say, I like to ask myself a few straightforward questions. Does what they are inveighing against really disrupt the functioning of our society? If not, does it nonetheless shock our sympathies for other sentient creatures, or destroy something that we greatly value? If not, why exactly should I give a damn?

Frankly, there's a place for moralists - but there's also good reason to keep them in their place.

Tuesday, May 16, 2006

H+ ... Transhumanism article gets Featured Article status at Wikipedia

In the past couple of days the article "Transhumanism", which I've been working on for some time (along with others, of course, particularly "Loremaster" and "StN"), has been given Featured Article status at Wikipedia - recognition as one of Wikipedia's best articles. This was quite a labour of love, with a lot of negotiation among people who have a range of views, in an effort to get a useful, neutral, comprehensive article. To the extent that we've succeeded, it has shown the Wikipedia process working at its best.

Meanwhile, I've been given admin. status at Wikipedia, as of last week, so it's now incumbent on me to be a paragon of neutrality, civility, etc., there, and to try to help in mopping up problems, etc., etc. All of which I'm pleased to do whenever I have time.

I do still warn my students who are tempted to run off to Wikipedia that it's a slightly dangerous source. The articles vary greatly in quality, and the whole thing is a work in progress. But I also think that trying to write a comprehensive encyclopedia, available free on-line to the worldwide public, is an unequivocally good thing to do. It's a project I'm glad to give my support.

Sunday, May 14, 2006

Happy birthday, Jenny - for 8 May.

We were celebrating this too much for me to blog it last week - dinner at Vlado's, for the best steak in Melbourne, and a trip to the stage musical production of The Lion King a couple of nights later.

Jenny shares her birthday with Thomas Pynchon. It is also VE Day. I share my birthday with Brian Aldiss, Robert Redford, and Alison Goodman. All that seems to be an interesting combination.

Sunday, May 07, 2006

Arguing about biological immortality

I owe an account of why I am slightly sceptical about an argument offered by Aubrey de Grey, who has defended the strong claim that there is a moral imperative to "cure" the process of human aging. (I'll henceforth drop the scare quotes around the word "cure", but I intend to signal that I am well aware of the controversies that surround whether the word is apt in this context.) Last time I blogged about this, I received a couple of responses - one castigating me for buying into de Grey's argument and terminology at all, and one for expressing any scepticism about it. Well, you can't please everyone. For the record, my aim is to examine the argument as objectively as I can. Yes, I do take it seriously, but, no, I will not examine it uncritically. Too much is at stake in the debate for me to be other than strictly objective, to the extent that the human condition allows.

My paraphrase of the full argument is set out in a post dated 2 April 2006.

By the end of this post, I will still owe a proper account of what doesn't entirely convince me, but I'll have made a start. My main purpose at this stage is to take a first stab at getting the logic of the argument clear. This will help us see where, if anywhere, it might be vulnerable to attack. It may also help us see whether better formulations of the argument are possible - perhaps my formulation does not do de Grey justice, or perhaps it is possible for him to do some further shoring up.

In what follows I will attempt to strip the argument to its logical bones. Stripped back, then, it seems to involve a main argument supported by several sub-arguments to ground its main premises. I think the total argument looks something like this:

Sub-argument one

P1.1. If we provide substantial funding for radical anti-aging research, then biomedical science will develop technologies capable of stopping, and possibly reversing, the process of aging.

P1.2. If biomedical science develops technologies capable of stopping, and possibly reversing, the process of aging, those technologies will be widely used.

P1.3. If biomedical technologies capable of stopping, and possibly reversing, the process of aging are widely used, then at least some human persons will have their lives extended beyond what would otherwise have been their duration.

C1. If we provide substantial funding for radical anti-aging research, then some human persons will have their lives extended beyond what would otherwise have been their duration.

Sub-argument two

P2.1. (C1.) If we provide substantial funding for radical anti-aging research, then some human persons will have their lives extended beyond what would otherwise have been their duration.

P2.2. If we do not provide substantial funding for radical anti-aging research, then some of those human persons will not have their lives extended beyond what would otherwise have been their duration.

C2. If we do not provide substantial funding for radical anti-aging research, then we will fail to extend the lives of some human persons beyond what would otherwise have been their duration.

Sub-argument three

P3.1. There is no morally salient distinction between shortening the life of a human person against that person's will and failing to save the life of such a person.

P3.2. There is no morally salient distinction between saving the life of a human person and extending the life of such a person beyond what would otherwise have been its duration.

C3. There is no morally salient distinction between shortening the life of a human person and failing to extend the life of such a person beyond what would otherwise have been its duration.

Sub-argument four

P4.1. It is morally impermissible to shorten the life of a human person against that person's will.

P4.2. (C3.) There is no morally salient distinction between shortening the life of a human person and failing to extend the life of such a person beyond what would otherwise have been its duration.

C4. It is morally impermissible to fail to extend the life of a human person beyond what would otherwise have been its duration.

Main argument

P1. (C2.) If we do not provide substantial funding for radical anti-aging research, then we will fail to extend the lives of some human persons beyond what would otherwise have been their duration.

P2. (C4.) It is morally impermissible to fail to extend the life of a human person beyond what would otherwise have been its duration.

Conclusion. If we do not provide substantial funding for radical anti-aging research, we act in a way that is morally impermissible.

Where, if anywhere, does this go wrong?

As far as I can see, the argument is deductively valid. More accurately, if it is technically invalid at any point, I believe that the problem could be repaired by introducing a few plausible conceptual and empirical claims. For example, we could plausibly stipulate, as a conceptual claim, that we "fail" to bring about a result if and only if that result would have taken place had we acted in a way that was a reasonable option for us. We could also stipulate that providing funding for radical anti-aging research is a reasonable option for us. Off-hand, I can see no problems of logic that look irreparable.

Moreover, I suggest that the premises of sub-arguments one and two should all be accepted, for the sake of discussion at least. Even if there are doubts about the likelihood that radical anti-aging research will ever be successful in its ambitions, it seems to me to be worthwhile scrutinising the argument on the assumption that de Grey is justified in his optimism about this empirical issue.

I conclude that any real difficulties will have to be found in the premises introduced within sub-arguments three and four: i.e., premises 3.1, 3.2, and 4.1. However, all of those premises seem to have a great deal that could be said for them. Premise 4.1, essentially the claim that killing a human person is morally wrong, may be too broad, but the most plausible exceptions to it, such as cases of voluntary euthanasia, are not obviously salient to the discussion. Perhaps the premise can be weakened in some ways without the argument collapsing. Premises 3.1 and 3.2 may be controversial, but they may seem almost axiomatic within some moral theories. In short, it will not be straightforward to reject any of these premises.

In a later post, however, I will consider whether 3.1, 3.2, and 4.1 form a mutually consistent set. It looks as if there are some internal tensions among those premises, and that something may have to give. From the viewpoint of my own sceptical (or "experiential" as per Dershowitz) moral theory, these three premises may be open to doubt in any event.

That said, even if we reject one or more of these three key premises, we may still be left with a perfectly good argument to fund research intended to extend human longevity. That is not quite the same as an argument for radical anti-aging research designed to reverse aging or at least stop it totally. We'll track through these complexities when I come back to the argument in a later post.

Alison G's new site

My dear friend Alison Goodman has just unveiled her brand-new designer website, which is almost as neat, cool, and generally fantastic as Alison herself. There's a fair bit of imagery on the site, but it only takes a few seconds to load. Great stuff!

I must remember to go and link to it from my own site.
